Inbound Connection Flooder Down (LinkingLion)

Wednesday, November 16, 2022

Over the past few months, I’ve repeatedly observed very short-lived P2P connections with fake user agents being made to my Bitcoin Core node in rapid succession. This morning around 7:00 am UTC, these abruptly stopped.

Update 2023-03-28: I’ve inspected the behavior of these connections in detail and written about it in “LinkingLion: An entity linking Bitcoin transactions to IPs?”. The frequent connections and evictions reported here only happen when my inbound connection slots are full.

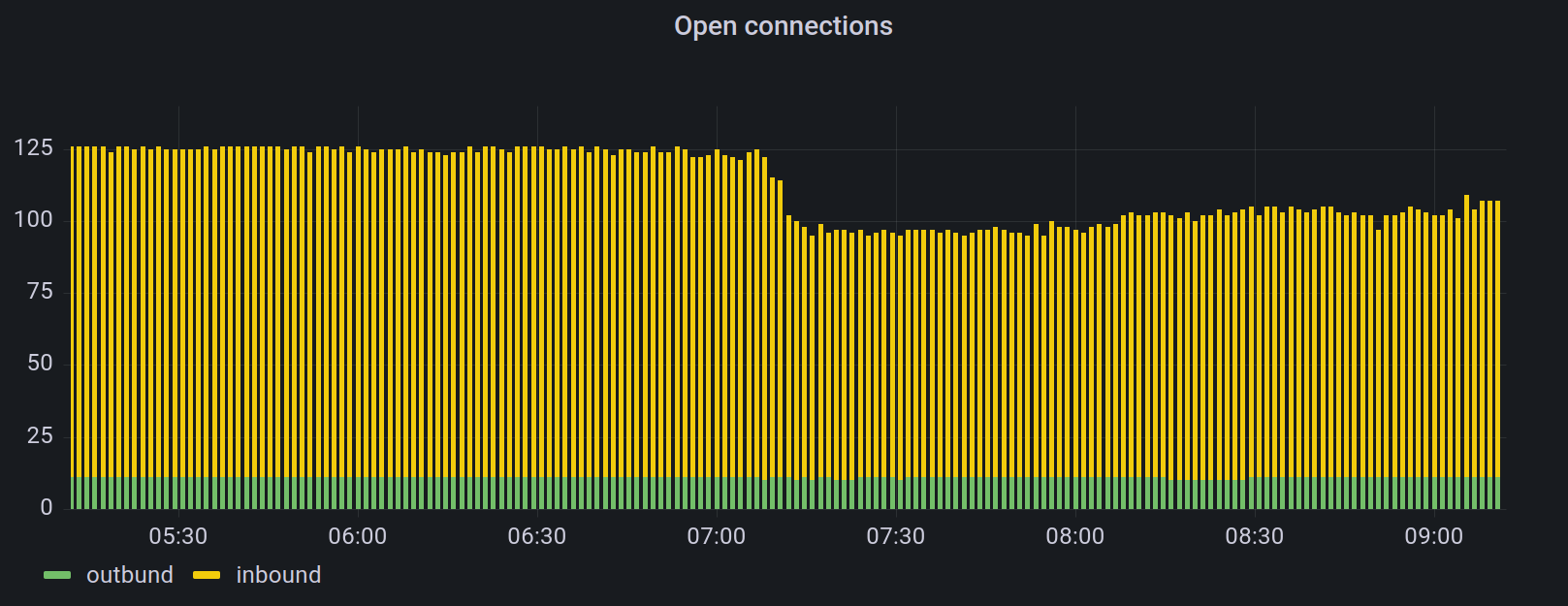

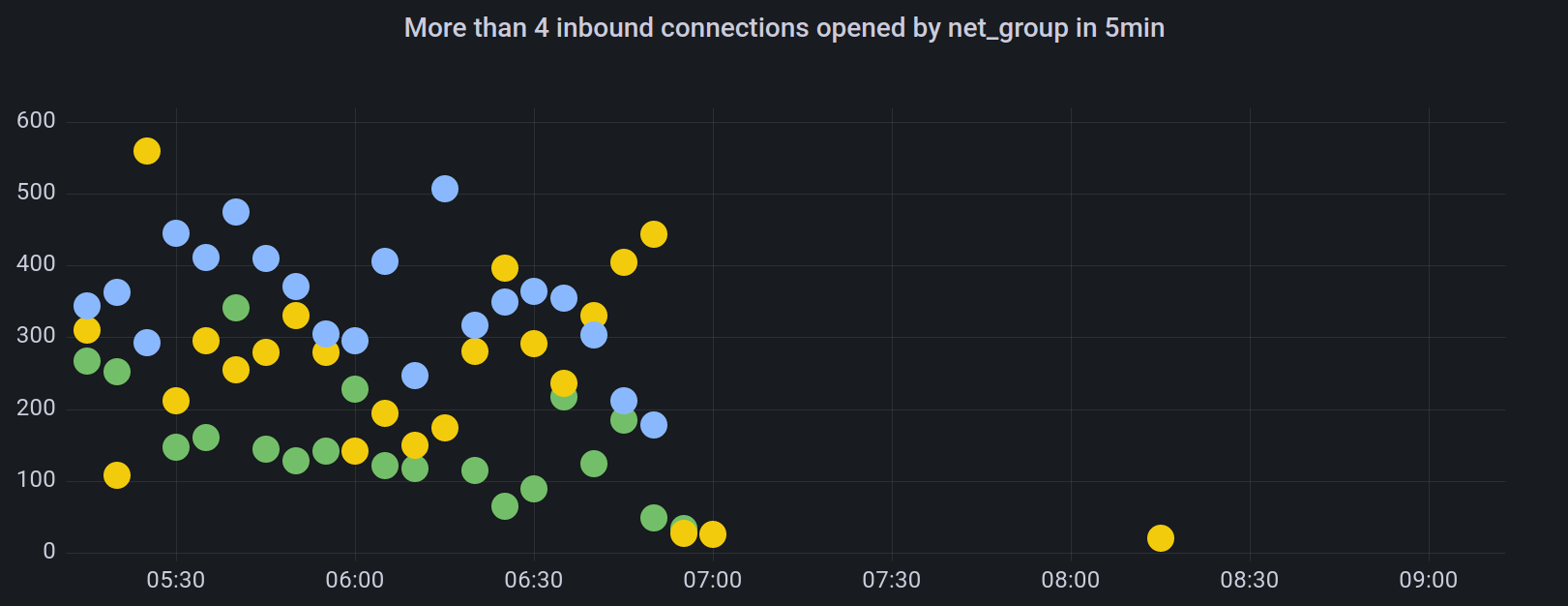

This morning, I noticed a sudden drop in inbound connections to one of my nodes monitored by a P2P monitoring tool I’ve been working on. The number of inbound connections dropped from 115 (all inbound connection slots used) to 85 in about 10 minutes. Drops in inbound connections could be caused by an incident at a cloud service provider like AWS, Google, Hetzner, or similar. However, I didn’t find any incident reports on relevant status pages.

Looking into it, I also noticed a significant drop in new inbound connections per minute, from a relatively high 40 to a more normal level of about three. As my node’s connection slots are limited to the default 125 connections, and 10 of these are used for outgoing connections by default, most of these 40 inbound connections per minute caused another connection to be evicted[1]. Consequently, the number of evicted and closed connections dropped after 7:00 am UTC too. The number of inbound connections per net_group[2] reveals that the new connections primarily originated from three net_groups.
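To illustrate the per-net_group view, here is a minimal sketch that groups IPv4 peer addresses by their /16 network and counts connections per group. It assumes the /16 definition described in this post's footnote; the helper names and the sample addresses (taken from documentation-style ranges) are hypothetical and are not data from my node.

```python
import ipaddress
from collections import Counter


def net_group(addr: str) -> str:
    """Return the /16 network an IPv4 address falls into, e.g. '198.51.0.0/16'."""
    # strict=False lets us pass a host address and get its enclosing network.
    return str(ipaddress.ip_network(f"{addr}/16", strict=False))


def connections_per_net_group(addrs):
    """Count how many addresses fall into each /16 group."""
    return Counter(net_group(a) for a in addrs)


# Hypothetical inbound peer addresses, for illustration only.
sample = ["198.51.100.7", "198.51.7.9", "203.0.113.20", "198.51.200.1"]
counts = connections_per_net_group(sample)
for group, n in counts.most_common():
    print(group, n)
```

A handful of groups dominating this count, as in the three net_groups mentioned above, is what makes this kind of flooding stand out.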

These newly opened inbound connections sent version messages with rather uncommon user agents (some are listed below). The user agents all appear with about the same frequency, which indicates that they are picked at random from a list. With slightly more effort, the entity opening these connections could have sampled from a more realistic distribution of currently used user agents, which would have made them a bit less obvious. After 7:00 am UTC, the version messages mainly contained the expected /Satoshi:23.0.0/, /Satoshi:22.0.0/, and an occasional seed node scraper or bitnodes user agent.

/bitcoinj:0.14.3/Bitcoin Wallet:1.0.5/

/Satoshi:0.16.0/

/Satoshi:0.8.2.2/

/breadwallet:1.3.5/

/bitcoinj:0.14.3/Bitcoin Wallet:4.72/

/Satoshi:0.12.1(bitcore)/

/bitcoinj:0.14.4/Bitcoin Wallet:5.21-blackberry/

/Satoshi:0.10.0/

/Satoshi:0.15.0/

/bitcoinj:0.14.5/Bitcoin Wallet:5.38/

/Satoshi:0.11.1/

/breadwallet:0.6.2/

/bitcoinj:0.14.5/Bitcoin Wallet:5.35/

/bitcoinj:0.14.4/Bitcoin Wallet:5.22/

/Satoshi:0.14.2/UASF-Segwit:0.3(BIP148)/

/breadwallet:0.6.4/

/Satoshi:0.15.99/

/Satoshi:0.16.1/

/Satoshi:0.8.5/

/bitcoinj:0.13.3/MultiBitHD:0.4.1/

/Satoshi:0.12.1/

/Satoshi:0.14.2(UASF-SegWit-BIP148)/

/bitcoinj:0.14.4/Bitcoin Wallet:5.25/

/bitcoinj:0.14.3/Bitcoin Wallet:4.58.1-btcx/

/breadwallet:0.6.5/

/Bitcoin ABC:0.16.1(EB8.0)/

...
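The observation that all of these user agents show up about equally often can be checked with a simple statistic. The following is a rough sketch, not the analysis I actually ran: it computes a chi-square statistic of the observed user agent counts against a perfectly flat distribution, where a value near zero means "suspiciously uniform". The function name and both sample lists are made up for illustration.

```python
from collections import Counter


def uniformity_chi_square(user_agents):
    """Chi-square statistic of observed counts vs. a flat distribution.

    Values near 0 mean every user agent appears about equally often.
    """
    counts = Counter(user_agents)
    expected = len(user_agents) / len(counts)
    return sum((c - expected) ** 2 / expected for c in counts.values())


# Hypothetical samples: one flat (flooder-like), one skewed (organic-like).
flat = ["/Satoshi:0.16.0/", "/breadwallet:1.3.5/", "/Satoshi:0.8.5/"] * 100
skewed = (
    ["/Satoshi:23.0.0/"] * 250
    + ["/Satoshi:22.0.0/"] * 45
    + ["/Satoshi:0.16.0/"] * 5
)

print("flat:", uniformity_chi_square(flat))
print("skewed:", uniformity_chi_square(skewed))
```

Real network traffic is dominated by a few recent releases, so an organic sample should score far from zero.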

I’ve seen connections from this entity as early as June this year. However, it might have been active before. Back then, I briefly recorded the Bitcoin P2P traffic between one of the IP addresses these connections were opened from and my node. The IP addresses belong to a US-based VPN service called CastleVPN[3] and a hosting provider called Fork Networking. Both are likely products of the same company. It’s unclear to me if the connections are being passed through the VPN or if they originate from a server hosted by Fork Networking. A typical P2P message exchange between the entity (them) and my Bitcoin Core node (us) looked as follows.

In the received version message, the protocol version of 70015 and the user agent /Satoshi:1.0.5/ clearly mismatch. The nonce, typically used to detect if we’re connecting to ourselves, is always 0, only the service bit for NODE_NETWORK is set, and the starting height is set ten blocks into the future. I’ve observed both positive and negative deltas for the starting height. During the communication, we don’t reveal a lot of information to the entity opening the connection. However, by sending the getheaders message, we inform the entity about which headers we know and which block we consider the chain tip. I found it interesting that they even respond to our ping. The connection stays open until it reaches a timeout or we evict the peer.
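The traits described above can be turned into a small checklist. This is only a sketch under my own assumptions: the `VersionMsg` type, the `flooder_traits` helper, and the `max_future_blocks` threshold are hypothetical, and the heights in the example are made up. No single trait is proof of anything (plenty of honest peers only signal NODE_NETWORK, for instance), and the user agent mismatch is not checked here.

```python
from dataclasses import dataclass

NODE_NETWORK = 1  # service bit 0 in the Bitcoin P2P protocol


@dataclass
class VersionMsg:
    """Subset of the fields carried by a P2P version message."""
    protocol_version: int
    services: int
    nonce: int
    user_agent: str
    start_height: int


def flooder_traits(msg: VersionMsg, our_height: int, max_future_blocks: int = 2) -> list[str]:
    """List which of the observed traits a version message shows."""
    traits = []
    if msg.nonce == 0:
        traits.append("zero nonce")
    if msg.services == NODE_NETWORK:
        traits.append("only NODE_NETWORK")
    # Peers lagging behind are normal; a peer claiming to be well ahead is not.
    if msg.start_height - our_height > max_future_blocks:
        traits.append("start height in the future")
    return traits


# Hypothetical message resembling the one described above (heights made up).
msg = VersionMsg(70015, NODE_NETWORK, 0, "/Satoshi:1.0.5/", 100_010)
print(flooder_traits(msg, our_height=100_000))
```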

I don’t know the goal of the entity opening the connections. They learn that there’s a listening Bitcoin node behind this IP address. However, that’s not information you’d need to query more than 40 times a minute. They also learn which chain tip we currently consider valid via the getheaders message we send them, which is interesting if you are monitoring the network for reorgs. However, far fewer connections would suffice for this too. Someone interested in monitoring block propagation would favor long-lived connections and learn about new blocks when we announce them.

If your goal is to block inbound connection slots on listening Bitcoin Core nodes, then you can achieve it with this technique. My node’s 30 newly freed inbound connection slots have been filling up with (hopefully) good peers over the day. Additionally, by constantly opening new inbound connections, you can get some good inbound connections evicted before they can send the node a block or transaction that would protect them. Since the eviction logic also considers other heuristics to protect our peers, an attacker can trick us into evicting only some of our peers.

I wonder if the entity behind this is actually malicious. There is always the possibility that anomalies stem from some misconfigured academic measurements. The entity doesn’t seem too sophisticated given that it, for example, picks uncommon user agents with the same frequency as commonly used user agents.

A simple protection against this is banning these IP addresses from opening connections with your Bitcoin Core node. There have been so-called banlists containing known spy nodes, mass connectors, and other abusive nodes in the past. While I don’t know about any currently maintained banlists, it should be possible to create a new banlist based on data collected with my P2P monitoring project.
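As a rough sketch of how such a banlist could be applied, the snippet below turns a list of subnets into `bitcoin-cli setban` commands. The subnets shown are placeholders from documentation ranges, not the actual ranges I observed, and the helper name and the 30-day ban time are my own arbitrary choices. The commands are only printed, not executed.

```python
import ipaddress


def setban_commands(subnets, bantime_seconds=30 * 24 * 60 * 60):
    """Build `bitcoin-cli setban` invocations for a list of subnets.

    Each entry is validated first; ipaddress raises ValueError on malformed
    input or when host bits are set.
    """
    cmds = []
    for subnet in subnets:
        net = ipaddress.ip_network(subnet)
        cmds.append(f'bitcoin-cli setban "{net}" add {bantime_seconds}')
    return cmds


# Placeholder subnets (documentation ranges), NOT the flooder's real ranges.
banlist = ["192.0.2.0/24", "198.51.100.0/24", "203.0.113.0/24"]
for cmd in setban_commands(banlist):
    print(cmd)
```

Review the printed commands before running them, since banning a whole /24 can also lock out unrelated peers in that range.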

I’d like to hear from other node operators if they observed a similar drop in inbound connections this morning around 7:00 am UTC. You can also check what peer ids are reported by the getpeerinfo RPC. My node has seen over a million peers so far. Also, if you are interested in this topic, I’m currently proposing a change to Bitcoin Core that adds the tracepoints I’m using for opened, closed, evicted, and misbehaving connections. I’m always happy about more testing and review.
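For node operators who want to check their own node, here is a minimal sketch that summarizes the JSON returned by `getpeerinfo`. It only relies on the `id`, `inbound`, and `subver` fields of that RPC's output; the `summarize_peers` helper and the sample data are hypothetical. In practice you would feed it the output of `bitcoin-cli getpeerinfo` instead of the inline sample.

```python
import json
from collections import Counter


def summarize_peers(getpeerinfo_json: str) -> dict:
    """Summarize peer ids and inbound user agents from getpeerinfo output."""
    peers = json.loads(getpeerinfo_json)
    inbound = [p for p in peers if p.get("inbound")]
    return {
        # Peer ids increase with every connection, so a very high id hints
        # at a lot of connection churn since the node started.
        "highest_peer_id": max((p["id"] for p in peers), default=None),
        "inbound_count": len(inbound),
        "inbound_user_agents": Counter(p.get("subver", "") for p in inbound),
    }


# Hypothetical, heavily truncated getpeerinfo output for illustration.
sample = json.dumps([
    {"id": 1000001, "addr": "198.51.100.7:50000", "inbound": True, "subver": "/Satoshi:0.8.5/"},
    {"id": 12, "addr": "203.0.113.20:8333", "inbound": False, "subver": "/Satoshi:23.0.0/"},
    {"id": 1000002, "addr": "198.51.100.8:50001", "inbound": True, "subver": "/breadwallet:0.6.4/"},
])
summary = summarize_peers(sample)
print(summary)
```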

Update 2022-11-18: I’ve observed another drop in inbound connections at my node. However, the inbound flooding seems to have started up again. Other Bitcoin developers reported seeing many inbound connections being opened to their nodes too. Some also reported seeing these connections from the same three /24 IPv4 subnets owned by Fork Networking. Based on a leasing price of $256 per month per /24 subnet, it can be assumed that this costs the entity at least $768 per month (3 × $256), not including server hosting fees.

1. The Bitcoin Core connection eviction logic protects nodes that, for example, provided useful information about blocks and transactions to us. For more information about the eviction logic in Bitcoin Core, see, for example, https://bitcoincore.reviews/16756 and https://bitcoincore.reviews/20477. ↩︎

2. In Bitcoin Core, a net_group of an IPv4 address is currently defined as the /16 subnet (first two octets of an IPv4 address; netmask 255.255.0.0). This might be superseded by asmap in the future. ↩︎

3. It seems their VPN is so secure that they don’t even see the need to use TLS on their website… http://www.castlevpn.com/ ↩︎

My open-source work is currently funded by an OpenSats LTS grant. You can learn more about my funding and how to support my work on my funding page.

Text and images on this page are licensed under the Creative Commons Attribution-ShareAlike 4.0 International License
